Hadoop yarn.nodemanager.resource.memory-mb. YARN supports an extensible resource model. By default, YARN tracks CPU and memory for all nodes, applications, and queues. The NodeManager is responsible for launching and managing containers on a node. yarn.nodemanager.resource.memory-mb is the amount of physical memory, in MB, that can be allocated for containers on that node. It also sets the limit for elastic memory control: the limit is the amount of memory allocated to all the containers on the node. I am currently working on determining the proper cluster size for my Spark application and I have a question.
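For the cluster-sizing question, a rough back-of-the-envelope calculation can help. The sketch below estimates how many Spark executors fit on one node given its yarn.nodemanager.resource.memory-mb value. It rests on several assumptions that are not stated in the text above: the executor container is the executor memory plus an overhead of max(384 MB, 10% of executor memory), the scheduler rounds each request up to a multiple of yarn.scheduler.minimum-allocation-mb (taken as 1024 MB here), and the function names are hypothetical. Check all of these against your own cluster's settings.

```python
import math


def container_size_mb(executor_memory_mb, min_allocation_mb=1024):
    """Estimate the YARN container size requested for one Spark executor.

    Assumptions (verify for your cluster): memory overhead is
    max(384 MB, 10% of executor memory), and the scheduler rounds the
    request up to a multiple of the minimum allocation.
    """
    overhead_mb = max(384, int(executor_memory_mb * 0.10))
    requested_mb = executor_memory_mb + overhead_mb
    # Round up to the next multiple of the minimum allocation.
    return math.ceil(requested_mb / min_allocation_mb) * min_allocation_mb


def executors_per_node(node_memory_mb, executor_memory_mb, min_allocation_mb=1024):
    """Number of executor containers that fit within the node's memory limit.

    node_memory_mb stands for the node's yarn.nodemanager.resource.memory-mb.
    """
    size_mb = container_size_mb(executor_memory_mb, min_allocation_mb)
    return node_memory_mb // size_mb


if __name__ == "__main__":
    # Hypothetical node: 8192 MB for containers, 4096 MB executors.
    print(container_size_mb(4096))
    print(executors_per_node(8192, 4096))
```

Under these assumptions, the estimate ignores the application master container and any other workloads sharing the node, so treat the result as an upper bound rather than a guarantee.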

From www.malaoshi.top


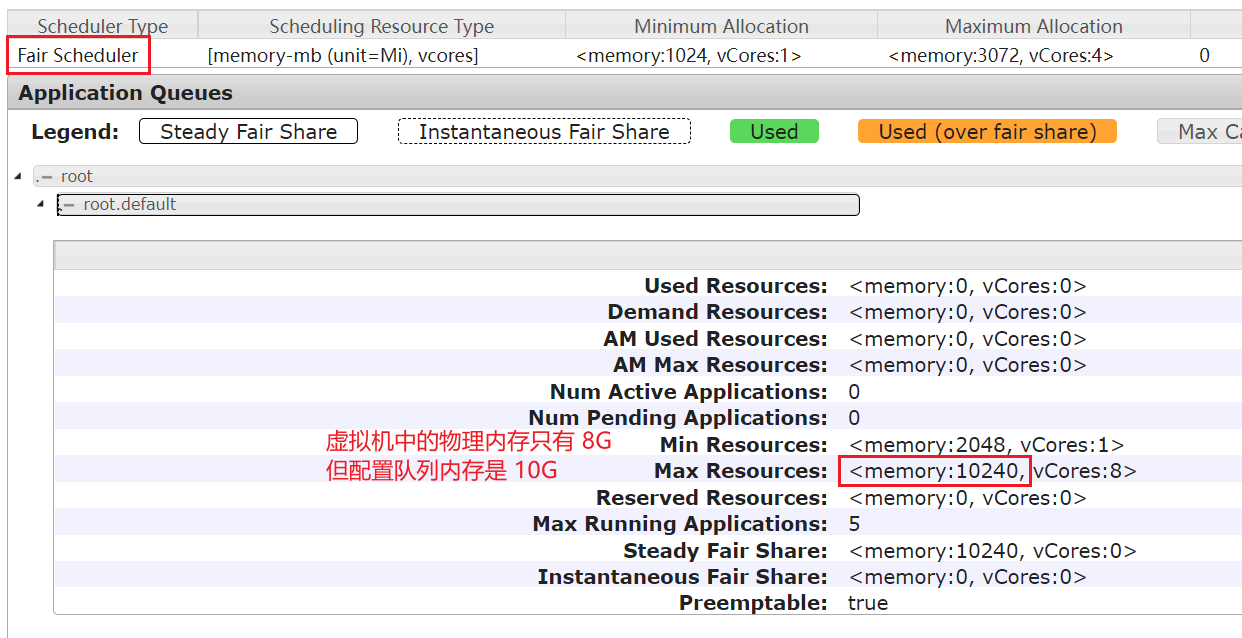

hadoop3.x Fair Scheduler: fair-scheduling queue memory and yarn.nodemanager.resource.memory-mb ...


From data-flair.training

Hadoop YARN Resource Manager A Yarn Tutorial DataFlair

From data-flair.training

Deep Dive into Hadoop YARN Node Manager A Yarn Tutorial DataFlair

From blog.csdn.net

Hadoop MapReduce & YARN Explained: master the YARN programming principles of Hadoop 2.0, implement matrix multiplication with the YARN programming interface, experience ...

From blog.csdn.net

[Big Data] Hadoop YARN cluster web UI metrics explained in detail (worth bookmarking) - hadoop max application master

From data-flair.training

Hadoop Tutorial for Beginners Learn Hadoop from A to Z DataFlair

From blog.csdn.net

In CDH5 (5.15.0), modifying Spark's yarn.nodemanager.resource.memory-mb and yarn.scheduler...

From blog.csdn.net

Hadoop YARN resource allocation overview and recommended common tuning parameters - yarn.nodemanager.resource.memory-mb (CSDN blog)

From www.fatalerrors.org

Build a Hadoop Cluster with Double Namenode+Yarn in Manual Mode

From zhuanlan.zhihu.com

Hadoop fully distributed installation, deployment, and testing (Zhihu)

From blog.csdn.net

Big Data Hadoop: deploying a hadoop+hive environment (window10) - hadoop+hive deployment (CSDN blog)

From www.cnblogs.com

Hadoop fully distributed deployment with Spark on YARN integration, YIDADASRE (Cnblogs)

From blog.csdn.net

Hadoop cluster setup, the HDFS part - hadoop cluster hdfs (CSDN blog)

From www.ibm.com

Top 6 Big SQL v4.2 Performance Tips Hadoop Dev

From segmentfault.com

hadoop [YARN Architecture and Implementation Made Simple] 6-1 NodeManager Feature Overview

From www.altexsoft.com

Apache Hadoop vs Spark Main Big Data Tools Explained

From www.loserzhao.com

Resolving YARN memory exceeding the configured yarn.nodemanager.resource.memory-mb (LoserZhao 诗和远方)